FACIAL RECOGNITION WITH PYTHON

Face detection and face recognition are active research areas in computer vision.

Applications of facial recognition include unlocking phones and other devices, security and defense. Doctors and health officials use facial recognition to access medical records and patient histories, and to help diagnose diseases.

In this Python project, we build a machine learning model that recognizes people in an image, using the face_recognition library and OpenCV.

Before we proceed, let's distinguish face detection from face recognition. Face detection is the process of locating faces in an image (their position and size) and extracting them so they can be passed to a face recognition algorithm. Face recognition is the process of identifying or verifying a person from their face in a photo or video frame. Before recognition, the detected face is usually extracted, cropped, scaled and, in many pipelines, converted to grayscale. Facial recognition therefore involves three steps: face detection, feature extraction and face recognition (matching).

1- Prepare the data set: Create two directories, train and test. For each member of the French government, download one image from the Internet and save it in the "train" directory. Make sure each selected image shows the person's facial features clearly enough for the classifier. To test the model, take a photo showing all of them together and place it in the "test" directory.

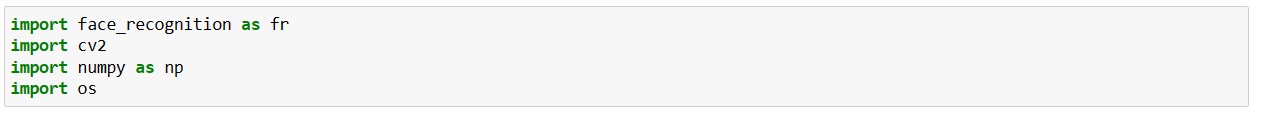
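The directory setup above can be scripted with the standard library. This is a minimal sketch, not the article's own code: the helper names `prepare_dirs` and `list_training_images`, the accepted file extensions, and the convention that each file is named after the person it shows (for example a hypothetical `train/jane_doe.jpg`) are assumptions.

```python
import os
from pathlib import Path

# Image extensions we accept (an assumption; adjust to your files).
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def prepare_dirs(root="."):
    """Create the train/ and test/ directories if they do not exist yet."""
    for name in ("train", "test"):
        os.makedirs(os.path.join(root, name), exist_ok=True)


def list_training_images(train_dir):
    """Return (person_name, image_path) pairs, one per image in train_dir.

    The person's name is the file name without its extension
    (hypothetical convention), e.g. train/jane_doe.jpg -> "jane_doe".
    """
    pairs = []
    for path in sorted(Path(train_dir).iterdir()):
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS:
            pairs.append((path.stem, str(path)))
    return pairs
```

Call `prepare_dirs()` once from the project folder, copy the images in, and `list_training_images("train")` then shows which files will be used for training.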

2- Train the model: First import the necessary modules:

The face_recognition library, built on top of dlib, provides high-level functions for detecting faces, computing face encodings and comparing them.

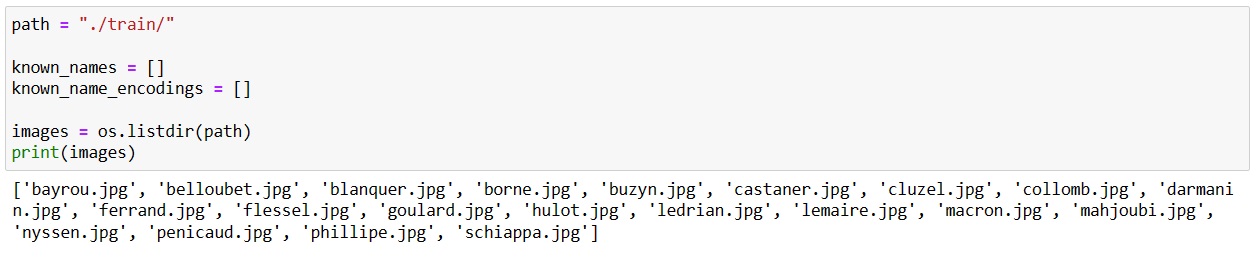

Now create two lists: one stores the names of the people in the images, the other their respective face encodings.

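A face encoding is a vector of values (128 numbers in face_recognition) that summarizes the distinctive features of a face, such as the distance between the eyes or the width of the forehead. The values are computed by a trained neural network rather than measured by hand, and encodings of the same person's face lie close to each other.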

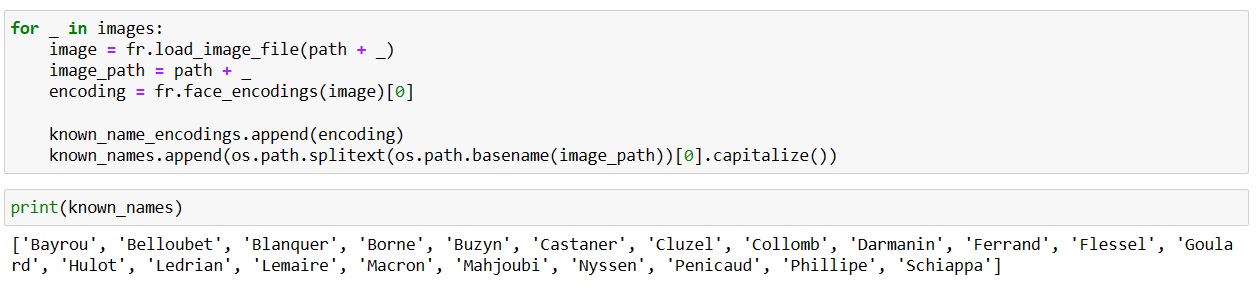

We loop through each of the images in our train directory, extract the name of the person in the image, compute their face encoding vector and store the information in the respective lists.

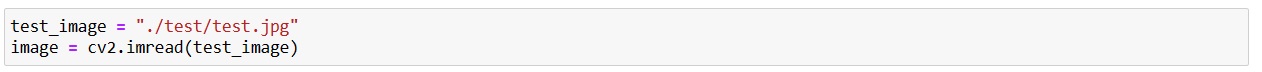
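A minimal sketch of this training loop, assuming one face per training image and that the file name (without extension) is the person's name. The helper names `encode_face` and `build_known_faces` are hypothetical and this is not the code shown in the screenshot. `face_recognition.face_encodings()` returns one 128-value NumPy array per face it finds, so an empty list means no face was detected.

```python
from pathlib import Path


def encode_face(image_path):
    """Return the encoding of the first face found in the image, or None."""
    import face_recognition  # requires the face_recognition package (dlib-based)

    image = face_recognition.load_image_file(image_path)  # RGB NumPy array
    encodings = face_recognition.face_encodings(image)    # one vector per detected face
    return encodings[0] if encodings else None


def build_known_faces(train_dir, encoder=encode_face):
    """Fill the two parallel lists: person names and their face encodings."""
    known_names = []
    known_encodings = []
    for path in sorted(Path(train_dir).iterdir()):
        if path.suffix.lower() not in {".jpg", ".jpeg", ".png"}:
            continue
        encoding = encoder(str(path))
        if encoding is None:
            # No face detected: skip the image instead of storing a bad entry.
            print(f"warning: no face found in {path.name}, skipping")
            continue
        known_names.append(path.stem)  # person's name = file name without extension
        known_encodings.append(encoding)
    return known_names, known_encodings
```

Typical use is `known_names, known_encodings = build_known_faces("train")`. Passing the encoder as a parameter keeps the loop independent of the library, so a stub can stand in for it.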

3- Test the model on the test data set:

As mentioned above, our test dataset contains only 1 image with all the people in it.

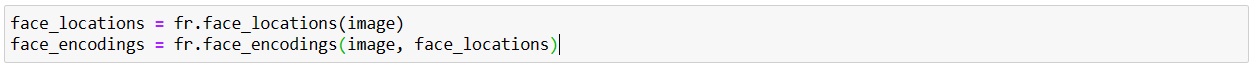

The face_recognition library provides a useful function, face_locations(), that returns the coordinates of each face detected in the image as a (top, right, bottom, left) tuple. Passing these locations to face_encodings() then gives the encoding of each detected face.

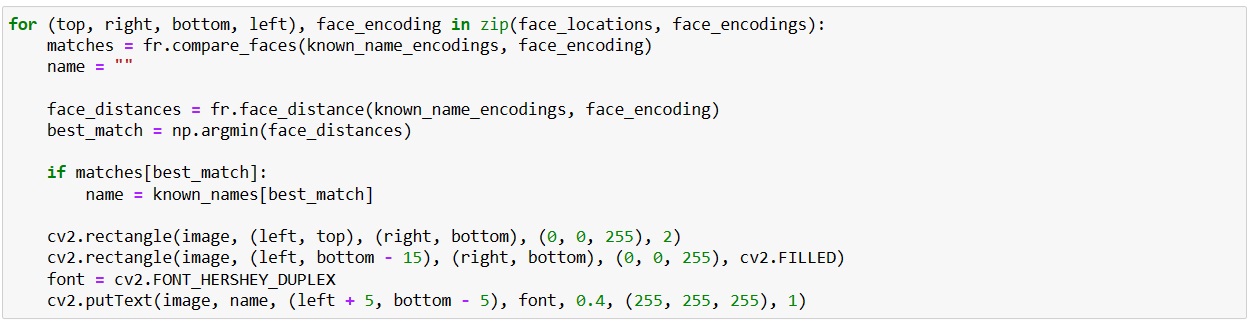
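A sketch of this step for the single test image, assuming the face_recognition package is installed; the function names `detect_and_encode` and `to_corners` are hypothetical. `to_corners` converts each (top, right, bottom, left) location into the top-left and bottom-right points that OpenCV's drawing functions expect.

```python
def to_corners(location):
    """Convert a face_recognition location (top, right, bottom, left)
    into the ((x1, y1), (x2, y2)) corner points used by OpenCV."""
    top, right, bottom, left = location
    return (left, top), (right, bottom)


def detect_and_encode(image_path):
    """Locate every face in the image and compute one encoding per face."""
    import face_recognition  # requires the face_recognition package

    image = face_recognition.load_image_file(image_path)
    locations = face_recognition.face_locations(image)  # list of (top, right, bottom, left)
    # Reusing the locations avoids running the face detector a second time.
    encodings = face_recognition.face_encodings(image, locations)
    return image, locations, encodings
```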

We loop over each face location found in the image together with its encoding, and compare that encoding with the encodings of the faces in the "train" dataset.

We then compute the face distance between the encoding of the test face and each encoding from the training images. The distance measures dissimilarity: the smaller it is, the more alike the two faces are. We pick the training face with the smallest distance; if that distance is small enough, the test face is identified as that person from the training dataset.
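A sketch of the matching rule, written with NumPy so the logic is visible; `face_recognition.face_distance()` computes the same Euclidean distances. The tolerance of 0.6 mirrors the default of `face_recognition.compare_faces()` and is only a starting value to tune. The name `best_match` and the "Unknown" label are illustrative assumptions. Without a threshold, every face, including people absent from the training set, would be assigned to someone.

```python
import numpy as np


def best_match(known_encodings, known_names, face_encoding, tolerance=0.6):
    """Return (name, distance) for the closest known face.

    The name is "Unknown" when no known face lies within `tolerance`.
    Distances are Euclidean: the smaller the distance, the more alike the faces.
    """
    if len(known_encodings) == 0:
        return "Unknown", None
    # One distance per training face (rows of the stacked encodings).
    distances = np.linalg.norm(
        np.asarray(known_encodings) - np.asarray(face_encoding), axis=1
    )
    best = int(np.argmin(distances))  # index of the most similar training face
    if distances[best] <= tolerance:
        return known_names[best], float(distances[best])
    return "Unknown", float(distances[best])
```

Inside the loop over test faces, a call such as `name, dist = best_match(known_encodings, known_names, encoding)` gives the label to draw.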

Next, draw a rectangle around each face using its location coordinates and the drawing functions of the cv2 module.

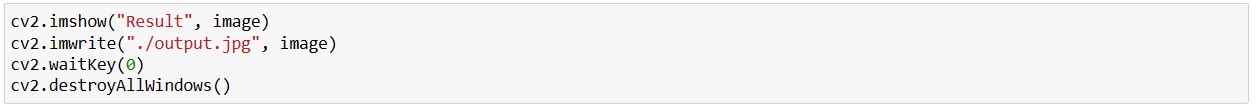
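A sketch of the drawing step, assuming the image was loaded with face_recognition (which returns RGB) and the locations come from face_locations(). OpenCV works in BGR order, so the image is converted before drawing. The function name `annotate`, the green color and the label placement are illustrative choices.

```python
def annotate(image_rgb, locations, names):
    """Draw a box and a name label for each face; returns a BGR copy for OpenCV."""
    import cv2  # OpenCV is only needed when this function runs

    # face_recognition loads images as RGB, while OpenCV expects BGR.
    image = cv2.cvtColor(image_rgb, cv2.COLOR_RGB2BGR)
    for (top, right, bottom, left), name in zip(locations, names):
        cv2.rectangle(image, (left, top), (right, bottom), (0, 255, 0), 2)
        # Put the name just above the box, or inside it if the box is near the top edge.
        y = top - 10 if top - 10 > 10 else top + 20
        cv2.putText(image, name, (left, y), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 255, 0), 2)
    return image
```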

Display the image with the imshow() function of the cv2 module and save it to the current working directory with imwrite(), for example cv2.imwrite("./output.jpg", image). Finally, free any resources that are still allocated, such as open display windows.
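A sketch of the display and save step, assuming a BGR image such as the one returned by the drawing step; the function name `show_and_save` and the window title are hypothetical. `cv2.waitKey(0)` matters here: without it the window is never refreshed and closes immediately. `cv2.destroyAllWindows()` releases the windows afterwards.

```python
def show_and_save(image_bgr, output_path="./output.jpg", window_name="Face recognition"):
    """Save the annotated image, show it until a key is pressed, then close the window."""
    import cv2  # OpenCV is only needed when this function runs

    # Save first so the result is on disk even if the window is closed abruptly.
    ok = cv2.imwrite(output_path, image_bgr)  # False if the file could not be written
    cv2.imshow(window_name, image_bgr)
    cv2.waitKey(0)           # block until a key is pressed
    cv2.destroyAllWindows()  # release the display windows
    return ok
```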

Let's see the output of the model.

In this machine learning project, we developed a facial recognition model in Python with the face_recognition library and OpenCV, using our own custom dataset.